Abstract

Motivation

Flow Matching (FM) has revolutionized the training of continuous normalizing flows (CNFs) by introducing a simulation-free approach. Recent works based on optimal transport theory simplify the training objective by assuming a constant velocity field between data and noise. This simplification yields straighter probability paths than the high-curvature paths of diffusion models, making FM an efficient way to train CNFs.

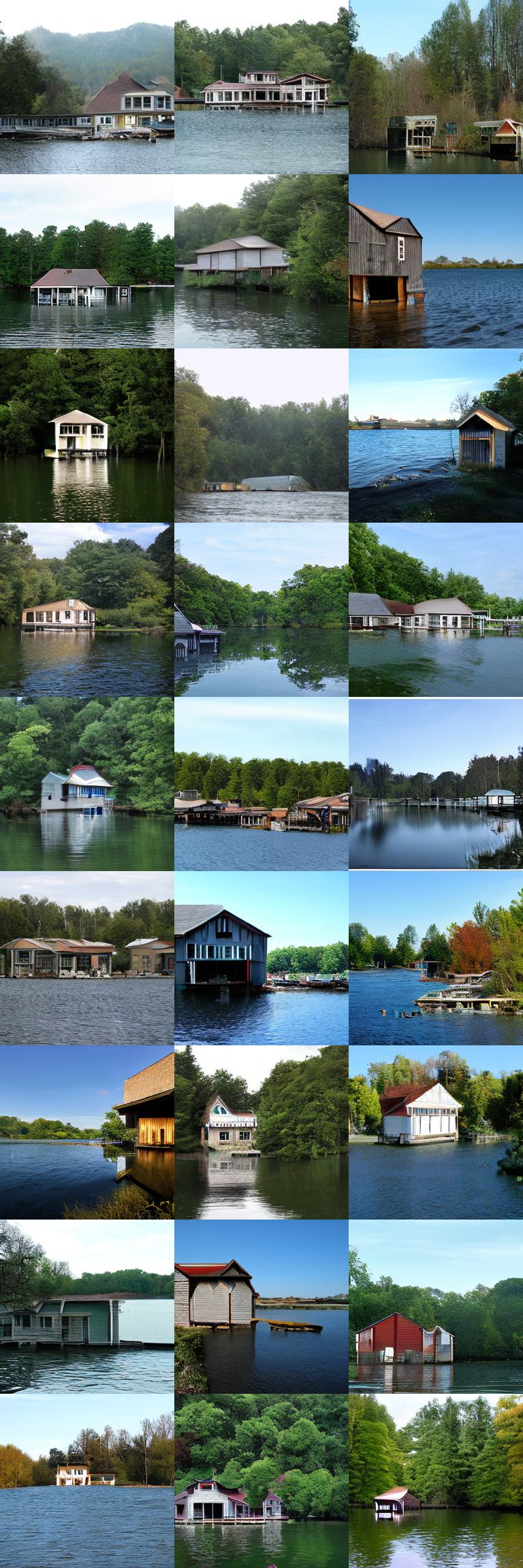

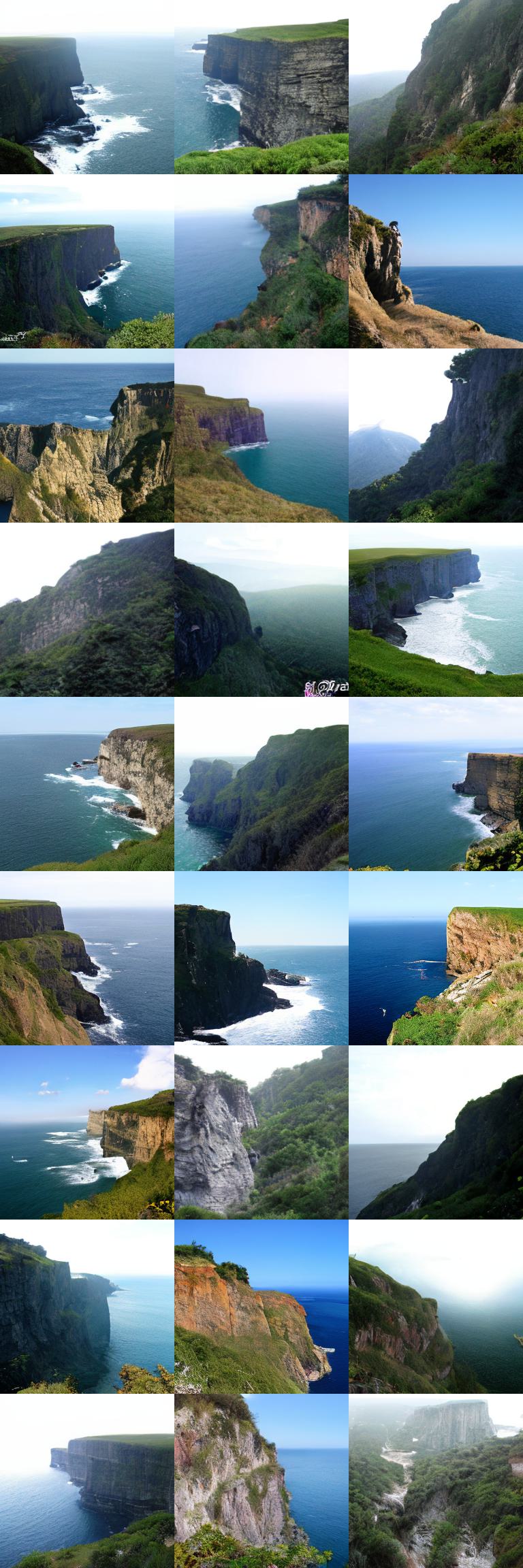

However, FM is still in its early stages and not yet ready for high-resolution image synthesis due to its costly ODE sampling process. Our approach represents a pioneering effort to thoroughly integrate and study latent representations for flow-matching models, with the aim of enhancing both scalability and performance.

In addition, the application of flow-based models to class-conditional generation remains unexplored. Hence, we introduce a classifier-free velocity field, inspired by classifier-free guidance in diffusion models. Although the technique is the same, our approach relies on a velocity field instead of noise, which leads to a distinctive method for class-conditional generation. Our method also supports other types of conditions, enabling tasks such as image inpainting and mask-to-image generation.

Why opt for the latent space?

Efficient computing: The most compelling advantage of the latent space is its compact representation, which enables smaller spatial dimensions. This property greatly benefits high-resolution synthesis by reducing the computational cost associated with evaluating numerical solvers at each step.

Expressivity: As found in prior latent-based diffusion models, training a generative model on latent variables typically improves expressivity and thereby model performance. Building on these findings, we expect that our model, which combines FM with a carefully selected latent framework, will exhibit greater expressivity.

As a result, the latent space empowers efficient training and sampling while enabling the generation of high-quality outputs.
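To make the efficiency argument concrete, the following sketch counts the elements a solver has to process per step in pixel space versus latent space. The downsampling factor of 8 and the 4 latent channels are assumptions chosen for illustration (they are common in latent diffusion autoencoders); the text above does not specify the autoencoder configuration.

```python
def num_elements(height, width, channels):
    """Number of scalar values in a tensor of the given shape."""
    return height * width * channels


def latent_shape(height, width, downsample=8, latent_channels=4):
    """Latent shape for a hypothetical autoencoder.

    downsample and latent_channels are illustrative assumptions,
    not values taken from the text.
    """
    return height // downsample, width // downsample, latent_channels


pixels = num_elements(256, 256, 3)
h, w, c = latent_shape(256, 256)
latents = num_elements(h, w, c)
ratio = pixels / latents
print(f"pixel space: {pixels}, latent space: {latents}, ratio: {ratio:.0f}x")
```

Under these assumptions, every velocity evaluation inside the ODE solver operates on a tensor 48 times smaller than the image itself.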

Method

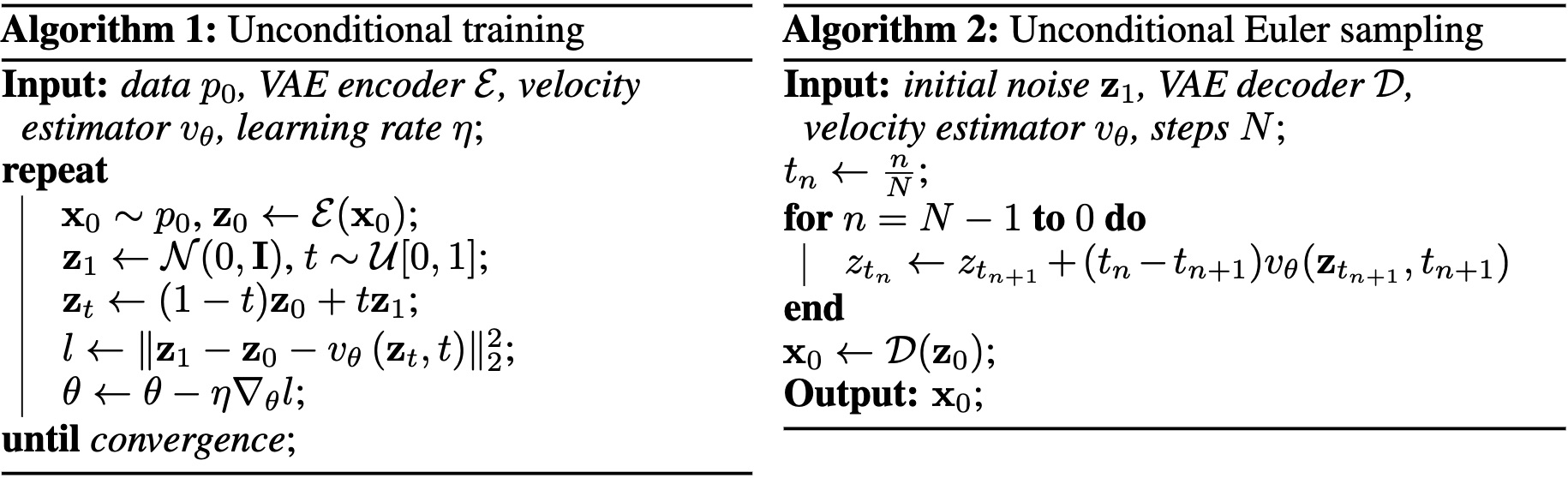

Unconditional model

The training and sampling procedures of the unconditional model are described as follows. During training, the model learns to estimate the transformation velocity from the current state \( z_t \) to the data distribution, which is constrained by the direct flow between data and noise, \( z_1 - z_0 \).

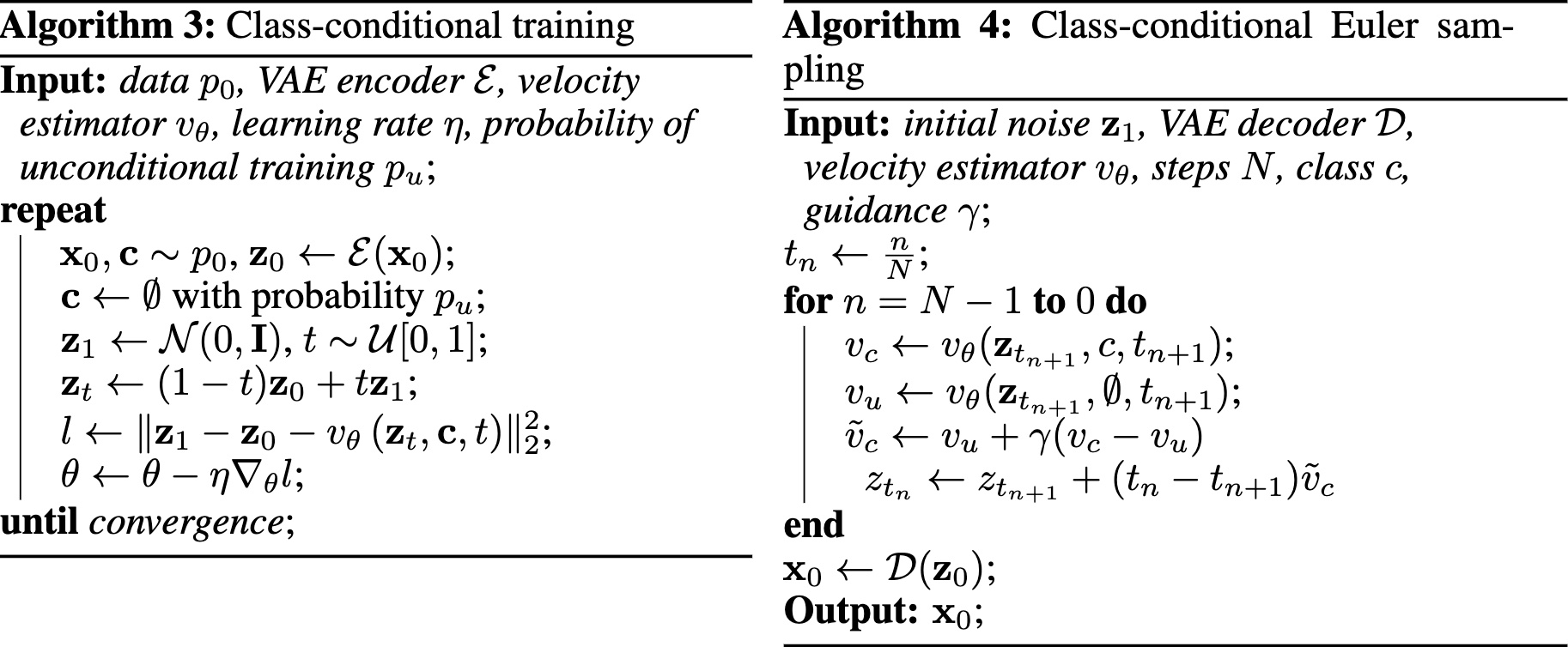
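The training target and the ODE sampling step can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' implementation. It assumes a linear interpolation \( z_t = (1 - t) z_0 + t z_1 \), with \( z_0 \) the latent of a data sample and \( z_1 \) Gaussian noise (the text does not state which endpoint is which, so swap them if the opposite convention applies). The names `velocity_fn`, `fm_loss`, and `euler_sample` are hypothetical.

```python
def interpolate(z0, z1, t):
    """Point on the straight path between z0 and z1 at time t in [0, 1]."""
    return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]


def target_velocity(z0, z1):
    """Constant velocity of the straight path: z1 - z0."""
    return [b - a for a, b in zip(z0, z1)]


def fm_loss(velocity_fn, z0, z1, t):
    """Mean squared error between the predicted velocity at (z_t, t) and z1 - z0."""
    zt = interpolate(z0, z1, t)
    pred = velocity_fn(zt, t)
    target = target_velocity(z0, z1)
    return sum((p - g) ** 2 for p, g in zip(pred, target)) / len(target)


def euler_sample(velocity_fn, z_start, t_start, t_end, num_steps):
    """Integrate dz/dt = v(z, t) from t_start to t_end with fixed-step Euler."""
    dt = (t_end - t_start) / num_steps
    z, t = list(z_start), t_start
    for _ in range(num_steps):
        v = velocity_fn(z, t)
        z = [zi + dt * vi for zi, vi in zip(z, v)]
        t += dt
    return z


# Toy check: if the "data distribution" is the single point `data`,
# the exact velocity field is v(z, t) = (z - data) / t for t > 0.
data = [2.0, -1.0]
noise = [0.3, 0.7]


def oracle(z, t):
    return [(zi - di) / t for zi, di in zip(z, data)]


loss = fm_loss(oracle, data, noise, 0.5)
sample = euler_sample(oracle, noise, 1.0, 0.0, 10)
```

In practice `velocity_fn` would be a neural network trained by minimizing `fm_loss` over random draws of \( z_0 \), \( z_1 \), and \( t \), and the sampler would be followed by the autoencoder's decoder. A higher-order or adaptive solver could replace the Euler loop.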

Class-conditional model

Unlike the unconditional model, the class-conditional model requires an extra class label during training. We also train the model unconditionally (i.e., without the class label) with probability \( p_{u} \) (e.g., 0.1) to preserve generation diversity while alleviating overfitting.
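A minimal sketch of the two ingredients follows: randomly dropping the label during training, and combining conditional and unconditional velocities at sampling time. The combination rule mirrors the usual classifier-free guidance formula from diffusion models, applied to velocities; it is an assumption here, since the text above does not spell out the formula. `NULL_CLASS`, `maybe_drop_label`, `guided_velocity`, and `scale` are hypothetical names.

```python
import random

NULL_CLASS = None  # hypothetical placeholder meaning "no class label"


def maybe_drop_label(label, p_uncond=0.1, rng=random):
    """With probability p_uncond, replace the label by NULL_CLASS so the
    same network is also trained on the unconditional objective."""
    return NULL_CLASS if rng.random() < p_uncond else label


def guided_velocity(velocity_fn, z, t, label, scale):
    """Blend conditional and unconditional velocities (assumed form):
    v = scale * v(z, t, label) + (1 - scale) * v(z, t, NULL_CLASS)."""
    v_cond = velocity_fn(z, t, label)
    v_uncond = velocity_fn(z, t, NULL_CLASS)
    return [scale * c + (1.0 - scale) * u for c, u in zip(v_cond, v_uncond)]


# Toy velocity function: 1 in every coordinate when a label is given, 0 otherwise.
def toy_velocity(z, t, label):
    value = 0.0 if label is NULL_CLASS else 1.0
    return [value] * len(z)


v = guided_velocity(toy_velocity, [0.0, 0.0], 0.5, label=3, scale=2.0)
```

With `scale = 1` the blend reduces to the purely conditional velocity and with `scale = 0` to the unconditional one; values above 1 extrapolate away from the unconditional prediction.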

Downstream tasks

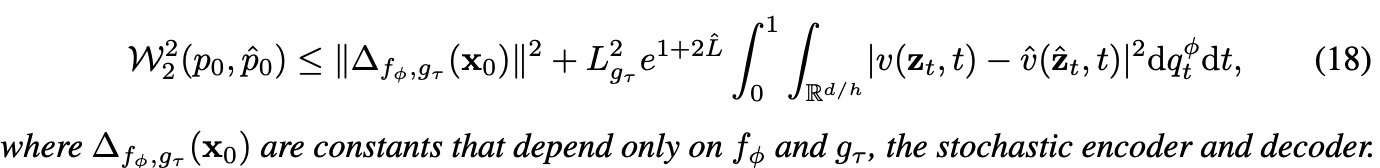

Theoretical analysis: Bounding estimation error

Related Works

- Yaron Lipman, Ricky T. Q. Chen, Heli Ben-Hamu, Maximilian Nickel, Matthew Le. Flow Matching for Generative Modeling, ICLR 2023 (Notable top 25%).

- Xingchao Liu, Chengyue Gong, Qiang Liu. Flow Straight and Fast: Learning to Generate and Transfer Data with Rectified Flow, ICLR 2023 (Notable top 25%).

- Arash Vahdat, Karsten Kreis, Jan Kautz. Score-based Generative Modeling in Latent Space, NeurIPS 2021.

- Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, Björn Ommer. High-Resolution Image Synthesis with Latent Diffusion Models, CVPR 2022.

- Jonathan Ho, Tim Salimans. Classifier-Free Diffusion Guidance, NeurIPS 2021 Workshop on Deep Generative Models and Downstream Applications.

- Prafulla Dhariwal, Alexander Quinn Nichol. Diffusion Models Beat GANs on Image Synthesis, NeurIPS 2021 (Spotlight).

- William Peebles, Saining Xie. Scalable Diffusion Models with Transformers, arXiv preprint arXiv:2212.09748.

BibTeX

@article{dao2023lfm,
  author = {Quan Dao and Hao Phung and Binh Nguyen and Anh Tran},
  title = {Flow Matching in Latent Space},
  journal = {arXiv preprint arXiv:2307.08698},
  year = {2023},
}